Multicam support in iOS 13 allows simultaneous video, photo, and audio capture | 9to5Mac

In iOS 13, Apple is introducing multi-cam support, allowing apps to simultaneously capture photos, video, audio, metadata, and depth from multiple microphones and cameras on a single iPhone or iPad.


Apple has long supported multi-camera capture on macOS, ever since OS X Lion, but until now, hardware limitations prevented it from rolling out APIs for iPhones and iPads.

The new feature and APIs in iOS 13 will allow developers to offer apps that stream video, photos, or audio from, for example, the front-facing camera and the rear cameras at the same time.

iOS 13 multi-cam support w/ AVCapture

During its presentation of the new feature at WWDC, Apple demoed a picture-in-picture video recording app that recorded the user with the front-facing camera while simultaneously recording from the main camera.
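Front-plus-back recording like the demo's maps onto `AVCaptureMultiCamSession`, the multi-cam session class AVFoundation adds in iOS 13. The following is a minimal sketch, not Apple's sample code. It assumes both streams come from the built-in wide-angle cameras and that each camera gets its own `AVCaptureVideoDataOutput`; the function name `makePiPSession` is hypothetical. It only compiles for iOS and only does anything useful on supported hardware.

```swift
import Foundation
#if os(iOS)
import AVFoundation

/// Hypothetical helper: builds a two-camera (back + front) session in the spirit of
/// the picture-in-picture demo. Returns nil if multi-cam or any piece is unavailable.
@available(iOS 13.0, *)
func makePiPSession(delegate: AVCaptureVideoDataOutputSampleBufferDelegate,
                    queue: DispatchQueue) -> AVCaptureMultiCamSession? {
    // Only newer hardware reports multi-cam support.
    guard AVCaptureMultiCamSession.isMultiCamSupported else { return nil }

    let session = AVCaptureMultiCamSession()
    session.beginConfiguration()
    defer { session.commitConfiguration() }

    for position: AVCaptureDevice.Position in [.back, .front] {
        guard
            let device = AVCaptureDevice.default(.builtInWideAngleCamera, for: .video, position: position),
            let input = try? AVCaptureDeviceInput(device: device),
            session.canAddInput(input)
        else { return nil }
        // Add without implicit connections so each camera can be wired
        // to its own output explicitly below.
        session.addInputWithNoConnections(input)

        let output = AVCaptureVideoDataOutput()
        output.setSampleBufferDelegate(delegate, queue: queue)
        guard session.canAddOutput(output) else { return nil }
        session.addOutputWithNoConnections(output)

        // Connect this camera's video port to this camera's output only.
        guard let port = input.ports.first(where: { $0.mediaType == .video }) else { return nil }
        let connection = AVCaptureConnection(inputPorts: [port], output: output)
        guard session.canAddConnection(connection) else { return nil }
        session.addConnection(connection)
    }
    return session
}
#endif
```

A caller would keep the returned session alive, call `startRunning()` off the main thread, and tell the two streams apart by the `output` argument passed to the delegate's `captureOutput(_:didOutput:from:)` callback.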

The demo app also enabled recording of the video, with the ability to switch between the two camera angles on the fly during playback in the Photos app. The feature will also give developers control over the dual cameras, including separate streams from the Back Wide or Back Telephoto cameras if they choose.

The new multi-cam feature will be supported in iOS 13 on newer hardware only, such as the iPhone XS, XS Max, XR, and iPad Pro.

Apple listed a number of supported formats for multi-cam capture (pictured above), which developers will note impose some artificial limitations compared to the cameras' regular capabilities.

Due to power constraints on mobile devices, iPhones and iPads, unlike Macs, will be limited to a single multi-cam session. That means you can't run multiple sessions with multiple cameras, or use multiple cameras in multiple apps, at the same time. There will also be a fixed set of supported device combinations dictating which cameras can capture together on a given device.
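The device-combination and format limits are exposed to apps as data rather than discovered by trial and error: a discovery session's `supportedMultiCamDeviceSets` lists the allowed camera combinations, and each `AVCaptureDevice.Format` carries an `isMultiCamSupported` flag. A minimal sketch follows, assuming the back and front wide-angle cameras are the pair of interest; `firstSupportedCombination`, `backAndFrontAreSupported`, and `multiCamFormats` are hypothetical names, and the selection logic is kept generic so it can be exercised without a camera.

```swift
import Foundation
#if os(iOS)
import AVFoundation
#endif

/// Hypothetical helper: returns the first allowed set that contains every wanted device.
/// Generic over Hashable so it works with AVCaptureDevice and with plain test values.
func firstSupportedCombination<Device: Hashable>(
    _ wanted: Set<Device>, in supportedSets: [Set<Device>]
) -> Set<Device>? {
    supportedSets.first { wanted.isSubset(of: $0) }
}

#if os(iOS)
/// Sketch: can the back and front wide-angle cameras run together on this device?
@available(iOS 13.0, *)
func backAndFrontAreSupported() -> Bool {
    let discovery = AVCaptureDevice.DiscoverySession(
        deviceTypes: [.builtInWideAngleCamera],
        mediaType: .video,
        position: .unspecified)
    guard
        let back = discovery.devices.first(where: { $0.position == .back }),
        let front = discovery.devices.first(where: { $0.position == .front })
    else { return false }
    // supportedMultiCamDeviceSets lists the combinations this hardware allows.
    return firstSupportedCombination([back, front], in: discovery.supportedMultiCamDeviceSets) != nil
}

/// Sketch: the subset of a camera's formats flagged as usable for multi-cam capture.
@available(iOS 13.0, *)
func multiCamFormats(for device: AVCaptureDevice) -> [AVCaptureDevice.Format] {
    device.formats.filter { $0.isMultiCamSupported }
}
#endif
```

Keeping the set-matching logic separate from AVFoundation is only a testing convenience; on a device, an app would pick an `activeFormat` from `multiCamFormats(for:)` before adding the camera to a multi-cam session.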

It doesn't appear that Apple itself is using any new multi-cam features in the iOS 13 Camera app, but we'd imagine it's something on the horizon now that Apple is officially rolling out support in AVCapture.

Semantic Segmentation Mattes

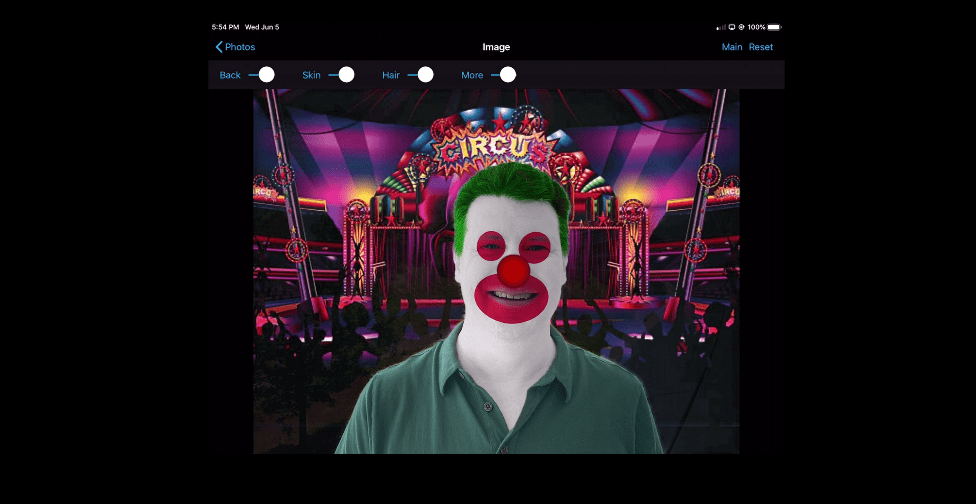

Also new for camera capture in iOS 13 are Semantic Segmentation Mattes. In iOS 12, Apple used something it calls a Portrait Effects Matte internally for Portrait Mode photos to separate the subject from the background. In iOS 13, Apple is introducing Semantic Segmentation Mattes to identify skin, hair, and teeth and refine these maps further, with an API for developers to tap into.
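On the capture side, the new mattes are requested through `AVCapturePhotoOutput`, which reports `availableSemanticSegmentationMatteTypes` and accepts `enabledSemanticSegmentationMatteTypes` (with `.hair`, `.skin`, and `.teeth` as `AVSemanticSegmentationMatte.MatteType` values). The sketch below is an assumption-laden illustration, not Apple's sample code: `mattesToEnable` and `configureSegmentationMattes` are hypothetical names, and it assumes the output is already attached to a session whose camera supports depth, and that turning on depth and Portrait Effects Matte delivery alongside is appropriate.

```swift
import Foundation
#if os(iOS)
import AVFoundation
#endif

/// Hypothetical helper: keeps only the requested matte types the output reports as
/// available, in the caller's order. Generic so it can be tested without a camera.
func mattesToEnable<T: Hashable>(requested: [T], available: [T]) -> [T] {
    let availableSet = Set(available)
    return requested.filter { availableSet.contains($0) }
}

#if os(iOS)
/// Sketch: enable hair/skin/teeth mattes on an output that is already in a running
/// configuration (assumption: the available list may be empty until then).
@available(iOS 13.0, *)
func configureSegmentationMattes(on output: AVCapturePhotoOutput) -> AVCapturePhotoSettings {
    // Assumption: depth and the portrait effects matte are enabled where supported.
    output.isDepthDataDeliveryEnabled = output.isDepthDataDeliverySupported
    output.isPortraitEffectsMatteDeliveryEnabled = output.isPortraitEffectsMatteDeliverySupported

    let wanted: [AVSemanticSegmentationMatte.MatteType] = [.hair, .skin, .teeth]
    output.enabledSemanticSegmentationMatteTypes =
        mattesToEnable(requested: wanted, available: output.availableSemanticSegmentationMatteTypes)

    // Per-photo settings mirror what the output now has enabled.
    let settings = AVCapturePhotoSettings()
    settings.isDepthDataDeliveryEnabled = output.isDepthDataDeliveryEnabled
    settings.isPortraitEffectsMatteDeliveryEnabled = output.isPortraitEffectsMatteDeliveryEnabled
    settings.enabledSemanticSegmentationMatteTypes = output.enabledSemanticSegmentationMatteTypes
    return settings
}
#endif
```

After capture, each matte would be read from the delivered `AVCapturePhoto` with `semanticSegmentationMatte(for:)`, for example `photo.semanticSegmentationMatte(for: .hair)`.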

In its WWDC session, Apple showed the new tech with a demo app that separated the subject in the image from the background and isolated the hair, skin, and teeth, making it easy to add effects such as face paint and hair color changes (pictured above).
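A hair color change like the one in the demo can be approximated with Core Image: tint the whole photo, then use the hair matte as a blend mask so only hair pixels keep the tint. This is a sketch, not Apple's sample code. `tintHair` and `matteScale` are hypothetical names, the multiply blend is one arbitrary choice of effect, and it assumes the matte's `mattingImage` pixel buffer shares the photo's orientation and that both images have a zero origin.

```swift
import Foundation
#if canImport(CoreImage)
import CoreImage
import CoreImage.CIFilterBuiltins
import CoreVideo
#endif

/// Hypothetical helper: per-axis factors that stretch a matte to the photo's size
/// (the matte may be smaller than the photo).
func matteScale(matteWidth: Double, matteHeight: Double,
                photoWidth: Double, photoHeight: Double) -> (x: Double, y: Double) {
    (x: photoWidth / matteWidth, y: photoHeight / matteHeight)
}

#if canImport(CoreImage)
/// Sketch: tint only the hair region of `photo` using a hair matte pixel buffer.
@available(iOS 13.0, macOS 10.15, *)
func tintHair(photo: CIImage, hairMatte: CVPixelBuffer, color: CIColor) -> CIImage? {
    var matte = CIImage(cvPixelBuffer: hairMatte)
    let s = matteScale(matteWidth: Double(matte.extent.width),
                       matteHeight: Double(matte.extent.height),
                       photoWidth: Double(photo.extent.width),
                       photoHeight: Double(photo.extent.height))
    matte = matte.transformed(by: CGAffineTransform(scaleX: CGFloat(s.x), y: CGFloat(s.y)))

    // Multiply a solid color over the whole frame to get a tinted copy.
    let tint = CIFilter.multiplyBlendMode()
    tint.inputImage = CIImage(color: color).cropped(to: photo.extent)
    tint.backgroundImage = photo
    guard let tinted = tint.outputImage else { return nil }

    // Where the matte is white use the tinted copy; where black, the original photo.
    let blend = CIFilter.blendWithMask()
    blend.inputImage = tinted
    blend.backgroundImage = photo
    blend.maskImage = matte
    return blend.outputImage
}
#endif
```

On iOS the `hairMatte` argument would come from `photo.semanticSegmentationMatte(for: .hair)?.mattingImage`; the skin and teeth mattes could drive other effects the same way.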

Developers can learn more about multi-cam support and semantic segmentation mattes on Apple's website, where Apple also has sample code for the demo apps.


//9to5mac.com/2019/06/07/multi-cam-aid-ios13/

2019-06-07 13:13:00Z
